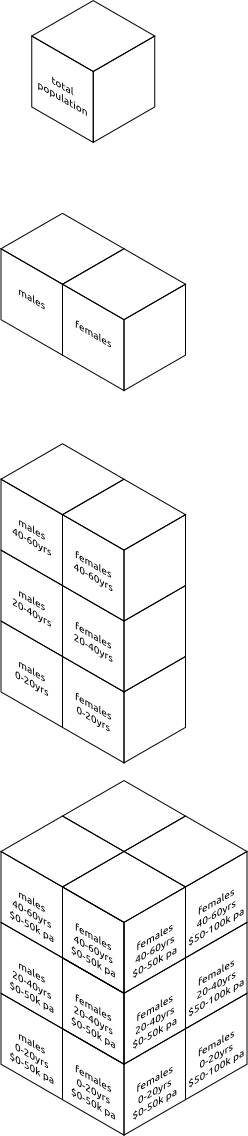

As part of my quest to georeference the old NSW Parish Maps, I ran into the ESRI World file format...

The Format

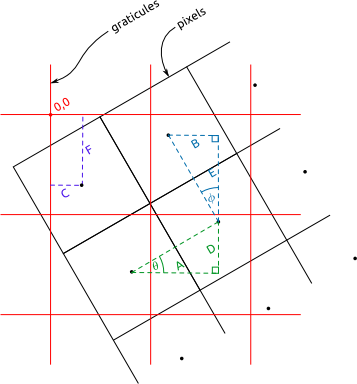

I relied a lot on http://en.wikipedia.org/wiki/World_file as a reference when figuring out how to make sense of world files. I remade the diagram from http://en.wikipedia.org/wiki/File:WorldFileParametersSchemas.gif into two views: pixel centric and graticule centric (svg versions here).

...and a different case, where the graticules are rotated in the other direction:

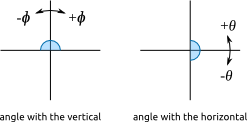
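To make the six world file parameters concrete, here is a minimal Java sketch that reads a world file and maps a pixel to world coordinates. The class and method names are hypothetical and not taken from graticules2wld. The line order (A, D, B, E, C, F) and the formulas follow the Wikipedia world file article linked above, where C and F locate the centre of the upper-left pixel.

```java
/**
 * Hypothetical world file reader (not graticules2wld's code).
 * File line order: A (x pixel size), D (rotation term), B (rotation term),
 * E (y pixel size, usually negative), C and F (world x/y of the centre
 * of the upper-left pixel).
 */
final class WorldFile {
    final double a, d, b, e, c, f;

    WorldFile(double a, double d, double b, double e, double c, double f) {
        this.a = a; this.d = d; this.b = b; this.e = e; this.c = c; this.f = f;
    }

    /** Parses the six whitespace-separated numbers of a .wld file. */
    static WorldFile parse(String text) {
        String[] tokens = text.trim().split("\\s+");
        if (tokens.length != 6) {
            throw new IllegalArgumentException("expected 6 values, got " + tokens.length);
        }
        double[] v = new double[6];
        for (int i = 0; i < 6; i++) {
            v[i] = Double.parseDouble(tokens[i]);
        }
        return new WorldFile(v[0], v[1], v[2], v[3], v[4], v[5]);
    }

    /** Pixel (column, row) to world: x' = A*col + B*row + C, y' = D*col + E*row + F. */
    double[] toWorld(double col, double row) {
        return new double[] { a * col + b * row + c, d * col + e * row + f };
    }
}
```

For example, a file containing 2, 0, 0, -2, 500000, 6200000 maps pixel (10, 5) to (500020, 6199990).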

For the purposes of my Java program (explained below), I define theta as the angle that the east/west graticules make with the horizontal (I call these lat graticules, since they are drawn along regularly spaced lines of latitude), and phi as the angle that the north/south graticules make with the vertical (I call these long graticules, since they are drawn along regularly spaced lines of longitude).
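Under an assumed sign convention, theta and phi can be read straight off the A, B, D, E terms. This is my own sketch, not necessarily how graticules2wld defines them: both angles are in radians, measured counter-clockwise as the image appears on screen, and the world file is assumed to be the usual kind where A*E - B*D < 0 (e.g. a north-up image with negative E).

```java
/**
 * Hypothetical helper recovering graticule angles from world file terms.
 * Assumed convention: radians, counter-clockwise on screen, A*E - B*D < 0.
 */
final class GraticuleAngles {
    /** Angle of the lat (east/west) graticules from the horizontal. */
    static double theta(double d, double e) {
        // Along a line of constant northing D*dCol + E*dRow = 0, so the
        // eastward direction in pixel space is (dCol, dRow) = (-E, D).
        // Screen y points down, so flip the row component before atan2.
        return Math.atan2(-d, -e);
    }

    /** Angle of the long (north/south) graticules from the vertical. */
    static double phi(double a, double b) {
        // Along a line of constant easting A*dCol + B*dRow = 0, so the
        // northward direction in pixel space is (dCol, dRow) = (B, -A),
        // i.e. (B, A) with screen y pointing up; measure it from "up".
        return Math.atan2(-b, a);
    }
}
```

With square pixels and a pure rotation the two angles come out equal under this convention; if they differ, the transform also contains shear or non-square pixels.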

Keep in mind that the image coordinate system and the projected coordinate system are different (assuming we are using some kind of UTM projection): image rows increase downwards, while northings increase upwards.
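Because rows run down while northings run up, E is normally negative, and going from projected coordinates back to pixels means inverting the 2x2 part of the affine transform. A minimal sketch using Cramer's rule; the class name is hypothetical, and note the arguments are in coefficient order (A, B, C, D, E, F), not world file line order.

```java
/** Hypothetical inverse of the world file transform: (easting, northing) to (column, row). */
final class InverseWorld {
    static double[] toPixel(double a, double b, double c, double d, double e, double f,
                            double x, double y) {
        double det = a * e - b * d; // must be non-zero for a usable world file
        if (det == 0) {
            throw new IllegalArgumentException("degenerate world file");
        }
        double dx = x - c;
        double dy = y - f;
        // Solve [a b; d e] * [col; row] = [dx; dy].
        double col = (e * dx - b * dy) / det;
        double row = (a * dy - d * dx) / det;
        return new double[] { col, row };
    }
}
```

With A = 2, B = 0, C = 500000, D = 0, E = -2, F = 6200000, the point (500020, 6199990) maps back to pixel (10, 5).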

Writing to a Wld File

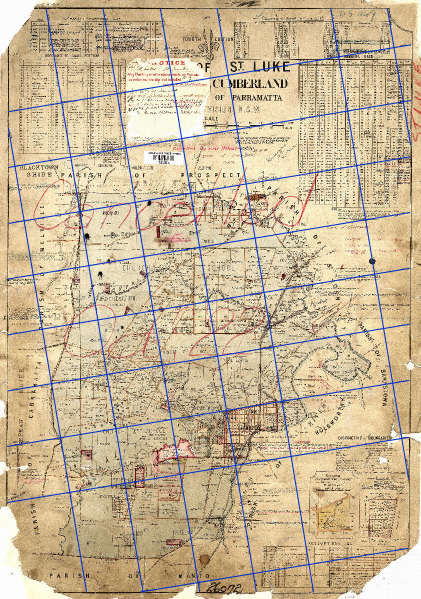

Some of the parish maps show graticules along with a reference origin for the easting and northing values written on them. If we can extract this information, we should be able to georeference the raster maps. I'm not actually sure what projection was used, but I think using a zone of Universal Transverse Mercator (UTM) should be okay. I also assume that the eastings and northings on the map are in chains.
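If the labels are in chains, they need converting to metres before they can be used as UTM offsets. A Gunter's chain is 66 feet, i.e. exactly 20.1168 m with the international foot. The sketch below assumes the UTM position of the map's reference origin is already known and that the map grid can be treated as the UTM grid shifted to that origin (no rotation or scale difference); both are assumptions, and the names are hypothetical.

```java
/** Hypothetical conversion of chain offsets from the map origin into UTM metres. */
final class Chains {
    static final double METRES_PER_CHAIN = 20.1168; // 66 international feet

    /** Assumes the map grid is aligned with the UTM grid (an assumption, see above). */
    static double[] toUtm(double originEasting, double originNorthing,
                          double chainsEast, double chainsNorth) {
        return new double[] {
            originEasting + chainsEast * METRES_PER_CHAIN,
            originNorthing + chainsNorth * METRES_PER_CHAIN
        };
    }
}
```

For example, 220 chains west and 40 chains south of the origin is an offset of (-4425.696 m, -804.672 m).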

The first step is extracting the graticules from the raster map as vectors. I do this by loading the image into Inkscape and tracing the graticules as line segments, giving each segment an svg path id such as "w220" to indicate west 220. I then run this svg file through pmap-svggraticules2csv.pl, which extracts the vector graticules and saves them into a csv file.

Example of vector graticules drawn over the raster map. Base map is Public Domain.
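Whatever tool reads the svg has to turn ids like "w220" back into numbers. Here is a hypothetical Java parser, assuming the first letter is one of n, s, e, w (case-insensitive) and the rest is the offset in chains, with west and south taken as negative. Ids that don't follow this pattern (such as Inkscape's default "path1234") are rejected.

```java
/** Hypothetical parser for graticule path ids such as "w220" or "n40". */
final class GraticuleLabel {
    final boolean isEasting;   // true for e/w labels: a north/south line of constant easting
    final double offsetChains; // signed offset from the origin: east/north positive

    GraticuleLabel(boolean isEasting, double offsetChains) {
        this.isEasting = isEasting;
        this.offsetChains = offsetChains;
    }

    static GraticuleLabel parse(String id) {
        if (id == null || id.length() < 2) {
            throw new IllegalArgumentException("bad graticule id: " + id);
        }
        boolean isEasting;
        double sign;
        switch (Character.toLowerCase(id.charAt(0))) {
            case 'e': isEasting = true;  sign = 1;  break;
            case 'w': isEasting = true;  sign = -1; break;
            case 'n': isEasting = false; sign = 1;  break;
            case 's': isEasting = false; sign = -1; break;
            default: throw new IllegalArgumentException("unknown direction in id: " + id);
        }
        // Throws NumberFormatException (an IllegalArgumentException) if the rest isn't a number.
        return new GraticuleLabel(isEasting, sign * Double.parseDouble(id.substring(1)));
    }
}
```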

From the csv file I can then use my Java program graticules2wld to find a best-fit world file (which is really just an affine transformation matrix) that georeferences the raster image.
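I don't reproduce graticules2wld's fitting here. To illustrate the general idea of a best-fit affine matrix, the sketch below is a generic least-squares fit from matched pixel/world points, solved via the normal equations and Cramer's rule. It is a swapped-in technique with hypothetical names, fine for a handful of well-spread points; a QR-based solver would be more robust for large or badly conditioned inputs.

```java
/** Hypothetical least-squares affine fit from (col, row) -> (x, y) point pairs. */
final class AffineFit {
    /** Returns {A, D, B, E, C, F} in world file line order. Needs >= 3 non-collinear points. */
    static double[] fit(double[][] pixels, double[][] world) {
        // Normal equations (P^T P) p = P^T v, where each row of P is [col, row, 1].
        double[][] n = new double[3][3];
        double[] rx = new double[3];
        double[] ry = new double[3];
        for (int i = 0; i < pixels.length; i++) {
            double[] r = { pixels[i][0], pixels[i][1], 1.0 };
            for (int j = 0; j < 3; j++) {
                for (int k = 0; k < 3; k++) {
                    n[j][k] += r[j] * r[k];
                }
                rx[j] += r[j] * world[i][0];
                ry[j] += r[j] * world[i][1];
            }
        }
        double[] abc = solve3(n, rx); // x' = A*col + B*row + C
        double[] def = solve3(n, ry); // y' = D*col + E*row + F
        return new double[] { abc[0], def[0], abc[1], def[1], abc[2], def[2] };
    }

    /** Solves a 3x3 linear system with Cramer's rule. */
    static double[] solve3(double[][] m, double[] v) {
        double det = det3(m);
        if (Math.abs(det) < 1e-12) {
            throw new IllegalArgumentException("too few points, or points are collinear");
        }
        double[] out = new double[3];
        for (int col = 0; col < 3; col++) {
            double[][] t = new double[3][];
            for (int i = 0; i < 3; i++) {
                t[i] = m[i].clone();
                t[i][col] = v[i]; // replace one column with the right-hand side
            }
            out[col] = det3(t) / det;
        }
        return out;
    }

    static double det3(double[][] m) {
        return m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
             - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
             + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]);
    }

    /** Formats the six parameters as the lines of a .wld file. */
    static String toWorldFileText(double[] p) {
        StringBuilder sb = new StringBuilder();
        for (double v : p) {
            sb.append(v).append('\n');
        }
        return sb.toString();
    }
}
```

With exact input the fit recovers the transform: the four pixel corners (0,0), (10,0), (0,10), (10,10) mapped to (100,900), (120,897), (105,880), (125,877) give A = 2, D = -0.3, B = 0.5, E = -2, C = 100, F = 900.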

An alternative is to use pmapgrid2gcps.pl to extract ground control points (GCPs) from the svg file by finding the intersection points of the graticules. You can then pass these GCPs to GDAL, either to warp the image or to use gcps2wld.py (from the Debian package python-gdal) to make a best-fit world file from the GCPs.
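Each GCP comes from a lat graticule crossing a long graticule. A minimal sketch of that step: intersect the infinite lines through two traced segments in pixel coordinates. This is the standard two-line intersection formula, not necessarily what pmapgrid2gcps.pl does, and the class name is hypothetical. Pairing the resulting pixel with the two segments' labels (e.g. "w220" and "n40") gives the GCP's world coordinates.

```java
/** Hypothetical intersection of two graticule lines, each given by two points. */
final class GraticuleIntersection {
    /** Returns {col, row} of the intersection, or null if the lines are (nearly) parallel. */
    static double[] intersect(double x1, double y1, double x2, double y2,
                              double x3, double y3, double x4, double y4) {
        double denom = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4);
        if (Math.abs(denom) < 1e-12) {
            return null;
        }
        double a = x1 * y2 - y1 * x2; // cross product term for line 1
        double b = x3 * y4 - y3 * x4; // cross product term for line 2
        double px = (a * (x3 - x4) - (x1 - x2) * b) / denom;
        double py = (a * (y3 - y4) - (y1 - y2) * b) / denom;
        return new double[] { px, py };
    }
}
```

For example, the segments (0,0)-(10,10) and (0,10)-(10,0) cross at (5, 5).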

I've made a Debian package for the graticules2wld program. The package was really hard to make, but in the end I did get it working. I ended up using jh_makepkg on just the source (i.e. no external build files, just the source code). If you want to build the Debian package yourself, you should be able to grab this directory and then run dpkg-buildpackage under graticules2wld-0.1. If you can help me avoid duplicating my code in this deb-source directory of the source tree, please let me know.

The Next Step...

Half the point of using the world file is that I can load the original image into JOSM and apply the affine transformation matrix (from the world file) to show the raster as a backdrop, without having to warp the image unnecessarily. So my next step is to get JOSM to open raster images that have a world file and place them correctly as a backdrop in the editor window.
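On the Java side, the world file maps directly onto java.awt.geom.AffineTransform, whose six-argument constructor takes (m00, m10, m01, m11, m02, m12), the same order as the world file lines A, D, B, E, C, F. The sketch below builds that transform, shifts it by half a pixel (world files reference pixel centres, while image space puts pixel i between i and i+1), and computes where the image's outer corners land. It uses no JOSM API; wiring this into a JOSM imagery layer is not shown, and the class name is hypothetical.

```java
import java.awt.geom.AffineTransform;
import java.awt.geom.Point2D;

/** Hypothetical helper placing a raster using its world file values. */
final class WorldFilePlacement {
    /** Image space (pixel i spans [i, i+1]) to world coordinates. */
    static AffineTransform imageToWorld(double a, double d, double b, double e,
                                        double c, double f) {
        AffineTransform t = new AffineTransform(a, d, b, e, c, f);
        // translate() is applied before the world file transform, so image-space
        // (0, 0) maps to the outer corner of the upper-left pixel.
        t.translate(-0.5, -0.5);
        return t;
    }

    /** World coordinates of the four outer corners, clockwise from the top left. */
    static Point2D[] corners(AffineTransform t, int width, int height) {
        double[][] px = { {0, 0}, {width, 0}, {width, height}, {0, height} };
        Point2D[] out = new Point2D[4];
        for (int i = 0; i < 4; i++) {
            out[i] = t.transform(new Point2D.Double(px[i][0], px[i][1]), null);
        }
        return out;
    }
}
```

For a 100 x 50 image with world file 2, 0, 0, -2, 500000, 6200000, the top-left corner lands at (499999, 6200001) and the bottom-right at (500199, 6199901).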